From Agents to Architecture - and the Hidden Gaps Still Holding Banks Back

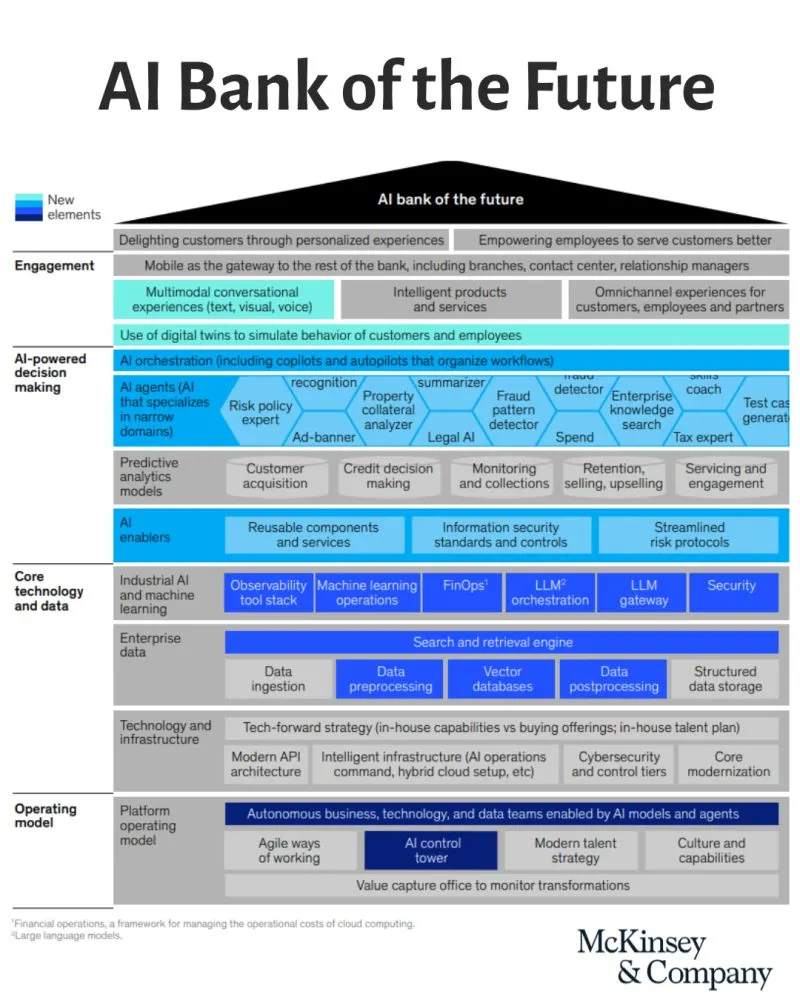

McKinsey’s AI Bank of the Future framework has been one of the most referenced models in banking transformation over the past few years. Its new update represents a significant evolution - moving from “AI as a toolkit” to “AI as the enterprise architecture.”

This is a meaningful shift.

It signals that we’ve entered a phase where the question is no longer how to use AI, but how to build institutions that are AI-native by design.

What’s New: From Tools to Agents, From Analytics to Orchestration

The updated McKinsey model reframes how banks should think about AI at scale. But the more important question is: what is it not saying?

From Tools to Agents

AI is moving beyond copilots and dashboards into networks of specialised agents - each designed for a specific task but coordinated by a central “AI brain.”

This concept of multi-agent orchestration allows the system to plan, route, and trigger the right models and workflows dynamically.

It marks a transition from static analytics to autonomous coordination.
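To make the idea of a central "AI brain" more concrete, here is a minimal Python sketch. It assumes a simple design in which specialised agents are registered against the task types they handle, and a central orchestrator plans a sequence of steps, routes each step to the matching agent, and merges the results into a shared case context. The class, the agent names (`kyc_check`, `credit_score`, `decision`), the static plan and the scoring rule are all hypothetical illustrations, not part of McKinsey's framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical agent contract: read the shared case context, return fields to add to it.
Agent = Callable[[dict], dict]


@dataclass
class Orchestrator:
    """Minimal central 'AI brain': plans steps and routes each one to a specialised agent."""
    registry: Dict[str, Agent] = field(default_factory=dict)

    def register(self, task: str, agent: Agent) -> None:
        # Agent registry: maps a task type to the agent responsible for it.
        self.registry[task] = agent

    def plan(self, request: str) -> List[str]:
        # Static lookup for illustration; a production planner might use a model instead.
        plans = {"loan_application": ["kyc_check", "credit_score", "decision"]}
        return plans.get(request, [])

    def run(self, request: str, case: dict) -> dict:
        context = dict(case)  # copy so the caller's input is not mutated
        for task in self.plan(request):
            agent = self.registry.get(task)
            if agent is None:
                raise LookupError(f"no agent registered for task '{task}'")
            context.update(agent(context))  # trigger the agent and merge its output
        return context


# Hypothetical specialised agents, each responsible for one narrow task.
def kyc_check(ctx: dict) -> dict:
    return {"kyc_passed": bool(ctx.get("customer_id"))}


def credit_score(ctx: dict) -> dict:
    # Toy score: income relative to twice the requested amount, capped at 1.0.
    return {"score": min(1.0, ctx["income"] / (2 * ctx["amount"]))}


def decision(ctx: dict) -> dict:
    # Anything that is not a clear approval is routed to a human reviewer.
    approved = ctx["kyc_passed"] and ctx["score"] >= 0.6
    return {"decision": "approve" if approved else "refer_to_human"}


brain = Orchestrator()
for name, fn in [("kyc_check", kyc_check), ("credit_score", credit_score), ("decision", decision)]:
    brain.register(name, fn)

result = brain.run("loan_application", {"customer_id": "C-001", "income": 90_000, "amount": 50_000})
print(result["decision"])  # approve
```

The design choice worth noting is that agents never call each other directly. The orchestrator owns the sequencing, which keeps the flow traceable and lets one agent be replaced without touching the others.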

From Analytics to Orchestration

Rather than focusing on single-point insights, the emphasis shifts to workflow orchestration - connecting models, APIs, and human decisions across domains. This demands new infrastructure: agent registries, policy enforcement layers, and governance hubs that ensure reliability and auditability.
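A policy enforcement layer can be pictured as a checkpoint that every agent call passes through: each call is checked against declared policies before it runs, and an audit entry is written whether it is allowed or blocked. The Python sketch below is a minimal illustration under stated assumptions; the `PolicyLayer` class, the two rules (only approved agents may run, no raw `ssn` field may reach an agent) and the `summarise` agent are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional, Set

# A policy inspects a proposed agent call and returns None (allow) or a reason (block).
Policy = Callable[[str, dict], Optional[str]]


@dataclass
class AuditEntry:
    timestamp: str
    agent: str
    allowed: bool
    reason: str


@dataclass
class PolicyLayer:
    """Hypothetical enforcement layer: every agent call is checked, then logged."""
    policies: List[Policy]
    audit_log: List[AuditEntry] = field(default_factory=list)

    def call(self, agent_name: str, agent: Callable[[dict], dict], payload: dict) -> Optional[dict]:
        for policy in self.policies:
            reason = policy(agent_name, payload)
            if reason is not None:
                self._record(agent_name, False, reason)
                return None  # blocked: the agent is never invoked
        self._record(agent_name, True, "all policies passed")
        return agent(payload)

    def _record(self, agent_name: str, allowed: bool, reason: str) -> None:
        self.audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            agent=agent_name, allowed=allowed, reason=reason,
        ))


def only_approved_agents(approved: Set[str]) -> Policy:
    def policy(agent_name: str, payload: dict) -> Optional[str]:
        return None if agent_name in approved else f"agent '{agent_name}' is not approved"
    return policy


def no_raw_pii(agent_name: str, payload: dict) -> Optional[str]:
    # Hypothetical rule: raw identifiers must be tokenised before reaching any agent.
    return "raw PII field 'ssn' in payload" if "ssn" in payload else None


def summarise(payload: dict) -> dict:
    return {"summary": payload["text"][:40]}


layer = PolicyLayer(policies=[only_approved_agents({"summariser"}), no_raw_pii])
layer.call("summariser", summarise, {"text": "Customer requests a limit increase"})
layer.call("summariser", summarise, {"text": "Update details", "ssn": "000-00-0000"})
layer.call("pricing_agent", summarise, {"text": "Quote a rate"})
print(json.dumps([asdict(e) for e in layer.audit_log], indent=2))
```

Because blocked calls are logged alongside allowed ones, the audit trail shows not only what the agents did but also what they were prevented from doing.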

From Point Solutions to Full-Stack, GenAI-Native Architecture

AI is becoming a foundational capability. That means reusable AI components, shared orchestration logic, and enterprise “AI control towers” that manage governance, lineage, and lifecycle across the portfolio. The goal is not a collection of models but a coherent, intelligent operating environment. The vision is bigger - and the pathway is more practical.

From Use Cases to Domain Rewiring

The earlier era of “AI use cases” is giving way to domain-level transformation. Instead of building scattered pilots, McKinsey advocates choosing a few high-impact domains - such as lending, onboarding, or fraud - and redesigning them end to end.

Leading financial institutions are already applying this logic.

- A global lender restructured its credit process around AI operating squads, linking origination, risk, and operations into one orchestration layer.

- A major insurer in North America used the same approach in claims, embedding GenAI across intake, triage, and resolution.

This shift enables compound efficiency, resulting in better outcomes, faster cycles, and fewer handoffs. The impact grows not by scaling individual models, but by rewiring the enterprise value chain one domain at a time.

Stronger Emphasis on Reuse and Scale

The new architecture introduces a principle of reuse before rebuild.

Reusable models, shared orchestration logic, and centralised governance frameworks reduce duplication and accelerate deployment. AI “control towers” serve as oversight mechanisms, managing model health, compliance, and lineage in real time.

It’s the same architectural maturity that cloud computing brought to infrastructure: industrialisation, standardisation, and scale.
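As a rough illustration of what an AI control tower checks, here is a minimal Python sketch of a portfolio-wide model inventory. It assumes each model is recorded with an owner, its upstream data sources (lineage), one monitored health metric and a production-approval flag; the `ControlTower` and `ModelRecord` names, the 0.8 accuracy threshold and the sample models are hypothetical. A real control tower would run such checks continuously against live monitoring feeds; here they run on demand.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ModelRecord:
    name: str
    owner: str
    upstream_sources: List[str]  # lineage: datasets the model is trained or scored on
    accuracy: float              # latest monitored health metric (hypothetical choice)
    approved_for_production: bool


@dataclass
class ControlTower:
    """Hypothetical portfolio-wide inventory checking health, lineage, and compliance."""
    min_accuracy: float = 0.8
    models: Dict[str, ModelRecord] = field(default_factory=dict)

    def register(self, record: ModelRecord) -> None:
        self.models[record.name] = record

    def review(self) -> Dict[str, List[str]]:
        # Return the open issues per model; models with no issues are omitted.
        issues: Dict[str, List[str]] = {}
        for name, r in self.models.items():
            problems = []
            if r.accuracy < self.min_accuracy:
                problems.append(f"health: accuracy {r.accuracy:.2f} below {self.min_accuracy:.2f}")
            if not r.upstream_sources:
                problems.append("lineage: no upstream data sources recorded")
            if not r.approved_for_production:
                problems.append("compliance: not approved for production")
            if problems:
                issues[name] = problems
        return issues

    def find_reusable(self, keyword: str) -> List[str]:
        # "Reuse before rebuild": surface existing models that pass every check.
        flagged = self.review()
        return sorted(n for n in self.models if keyword in n and n not in flagged)


tower = ControlTower()
tower.register(ModelRecord("fraud_score_v3", "fraud-team", ["card_transactions"], 0.91, True))
tower.register(ModelRecord("fraud_score_v2", "fraud-team", [], 0.74, True))
tower.register(ModelRecord("income_estimator", "lending-team", ["payroll_feed"], 0.88, False))

print(tower.find_reusable("fraud"))  # ['fraud_score_v3']
for name, problems in tower.review().items():
    print(name, problems)
```

Tying reuse to the same checks that govern health, lineage and compliance means a team looking for an existing component is only pointed at models the control tower already trusts.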

What Is It Not Saying?

The Hidden Burden on the “Grey” Areas

However, one practical challenge remains largely unspoken.

By focusing transformation on selected domains, many institutions are underestimating the load on the areas left out of scope - the “grey” boxes in the original framework.

These are the legacy operations, risk, compliance, and data teams that still have to:

- Run business as usual, often under tighter budgets.

- Integrate with new AI systems they didn’t design.

- Adapt processes and controls for emerging risks such as data leakage, lineage drift, or explainability.

Without dedicated funding or redesign, these functions carry an increasing operational burden. They are expected to support AI adoption while continuing to meet performance and cost targets - an imbalance that creates friction and slows progress.

We see this dynamic repeatedly in large institutions: the visible change happens in the “blue” areas, but the stress builds in the “grey” ones.

Ignoring these foundational functions is one of the most common reasons why AI strategies stall after initial success.

Why This Matters

McKinsey’s updated framework provides a clear blueprint for the next phase of AI in financial services. It captures four critical shifts:

- Agents instead of tools

- Orchestration instead of analytics

- Reuse instead of reinvention

- Domains instead of pilots

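What it leaves less explicit is the load on the grey areas: the operations, risk, compliance, and data teams that must keep the business running, integrate with AI systems they didn't design, and adapt processes and controls for emerging risks such as data leakage, lineage drift, or explainability.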

Those are the layers that will determine whether a bank becomes truly AI-native or remains a collection of disconnected experiments.

AI is no longer a feature of the bank. It is becoming the architecture of the bank.

The winners will be those who design for orchestration, not addition - building systems where every function, even the legacy ones, can participate in intelligence at scale.